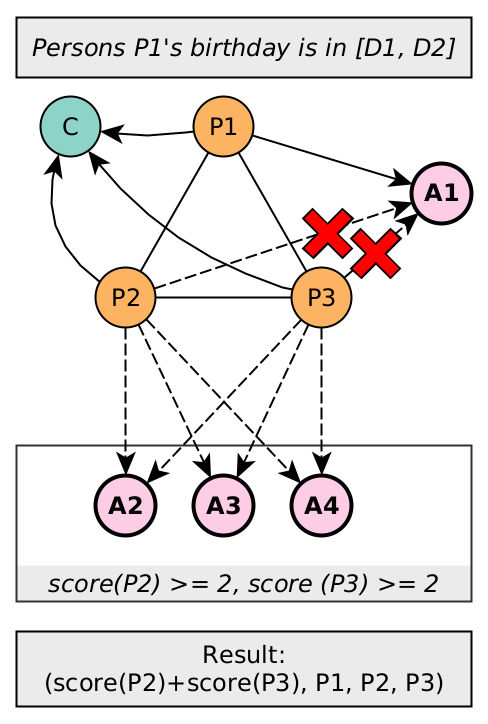

The marketeers of a social network have been data mining the musical preferences of their users. They have built statistical models which predict given an interest in say artists A2 and A3, that the person would also like A1 (i.e. rules of the form: A2 and A3 => A1). Now, they are commercially exploiting this knowledge by selling targeted ads to the management of artists who, in turn, want to sell concert tickets to the public but in the process also want to expand their artists' fan-base.

The ad is a suggestion for person P1 who is already interested in artist A1 to buy concert tickets of this artist with a discount (three tickets for the price of two) with the goal of introducing the artist to two friends ("who we know will love it" - the social network says) on the occasion of his/her birthday. Persons P2 and P3 must be friends themselves and must live in the same city as P1. The goal of this ad campaign is to extend the fan base of artist A1. Therefore, we require that P2 and P3 are not yet interested in A1 but are interested in some of the other artists A2, A3 and A4, ones that the data mining model predicts to be correlated with A1.

The dataset of this assignment is a slightly modified subgraph generated by the LDBC social network benchmark, which models a social network much like Facebook. This is a synthetically generated dataset, but it contains complex patterns and correlations that are also found in real life. For instance, people from a certain country often have a name that is typical for that country. Also, people who grew up in the same location or have similar interests are much more likely to know each other than random persons. The original dataset contains a lot of information on where the persons studied or work, as well as their wall posts, pictures, and likes etc. However, for this task, we only operate on users, their birthday and location attributes, and their "knows" and "interest" graphs.

Every user account in the social network is represented as a line in the person.csv file with the header defining the attribute names:

Person.id|firstName|lastName|gender|birthday|creationDate|locationIP|browserUsed|locatedIn 420|K.|Reddy|female|1981-09-08|2010-02-01T04:10:19.506+0000|14.102.233.188|Safari|152 7489|Shweta|Rao|male|1985-11-07|2010-03-27T18:13:25.167+0000|61.11.117.174|Internet Explorer|177 9037|Meera|Khan|female|1984-03-17|2010-03-14T07:06:54.725+0000|59.176.223.143|Chrome|178 12860|Aditya|Sharma|female|1982-08-20|2010-02-19T12:51:10.863+0000|103.1.115.239|Firefox|271 [...]

The last column (locatedIn) denotes cities; these are encoded as numbers here.

The social graph is formed by persons being vertices and edges entries in the "knows.csv" file, where each edge is represented by two Person IDs (knows.csv):

personId|friendId 420|12860 420|8150883 420|1099518059227 420|2199027829087 ...

From the first entry together with the information from person.csv, we see that person "K. Reddy" knows "Aditya Sharma".

The connections between people are bidirectional, i.e. if P1 knows P2, P2 also knows P1. The data set contains the edges in both directions.Finally, the social network knows for each person a number of favorite artists (musical interests). The interests are encoded in a separate "interest.csv" file as combinations of (personID, tagID), where tagID is a number and represents an artist:

personId|interest 420|1 7489|3 7489|138 7489|142 ...

There are approx. 16000 different artists. As in real life, the popularity of the artists is quite skewed.

Please be aware that the connection structure of this graph is highly complex and correlated. The graph is one huge connected component, and everyone knows everyone within 4 steps. While in a large network as Facebook (1.3 billion users) the average amount of friends is above 400, in smaller networks like here it is lower. The dataset you operate just has 8.9M persons (LDBC "scale factor" sf3000), who know on average 136 persons (and on average have 23 interests).

The CSV files for the sf100 dataset are linked below, but they are also included in the virtual machine for the practical in /opt/lsde/. Note that the files are split to allow parallel loading (e.g. by Spark).

You must implement, hence, a program that gets as parameters:

- a date range [D1..D2]

- target artist A1, and

- related artists A2, A3 and A4.

You can implement this query program in any way you like, but it better be efficient. We will test the query multiple times with different birthdate and artist parameters. After all, this is repetitive business for the social network marketeers, as time passes by and other people will have their birthday, and as they sell the idea of such an ad campaign to the managers of different artists.

In assignment 1a the competition is about writing a version of the query program that actually finishes within the benchmarking timeout on the large dataset. You will see that this is not so easy. The way to achieve this is to restrict the random access pattern that the naive implementation performs, separating the accesses in stages, such that in each stage only one part of the data structures is accessed randomly. Specifically, the check for bi-directional friendship, which randomly accesses the largest array (knows.bin) should be the last stage, because in the last stage there are only few candidate persons/friends in play left.

Rather than running on the textual CSV, you can prepare some better data representation of your choice. For this purpose, before the queries are run, a "reorg" program that you can provide, is executed. In assignment 1b, the reorg program should reduce the size of the data, where the most important hint is that only friendships between people from the same location are relevant. There are potentially more things that you might do in reorg that will help the queries getting faster (which you may also try).

In assignment 1c the data structures are now CSV files, which as you will see make the data size much bigger. Therefore, you should in assignment 1c convert these datasets to a better format (Parquet) and generally try to make Spark do the optimizations that you did manually before in assignment 1b.