UAI 2010 Invited Speakers

Banquet Speaker: David Stork, Ricoh Innovations

Computer vision and computer graphics in the study of fine art: New rigorous approaches to analyzing master paintings and drawings

Abstract

Mark Steyvers, University of California Irvine

The Wisdom of Crowds in the Aggregation of Rankings

Abstract

Cosma Shalizi, Carnegie-Mellon University

Markovian (and conceivably causal) representations of stochastic processes

Abstract

Christopher Langmead, Carnegie-Mellon University

Probabilistic Graphical Models for Structural Biology

Abstract

Abstracts

Banquet Speaker: David Stork

Computer vision and computer graphics in the study of fine art: New rigorous approaches to analyzing master paintings and drawings

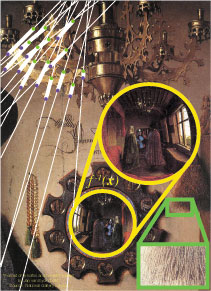

New rigorous computer algorithms have been used to shed light on a number of recent controversies in the study of art. For example, illumination estimation and shape-from-shading methods developed for robot vision and digital photograph forensics can reveal the accuracy and the working methods of masters such as Jan van Eyck and Caravaggio. Computer box-counting methods for estimating fractal dimension have been used in authentication studies of paintings attributed to Jackson Pollock. Computer wavelet analysis has been used for attribution of the contributors in Perugino's Holy Family and works of Vincent van Gogh. Computer methods can dewarp the images depicted in convex mirrors depicted in famous paintings such as Jan van Eyck's Arnolfini portrait to reveal new views into artists' studios and shed light on their working methods. New principled, rigorous methods for estimating perspective transformations outperform traditional and ad hoc methods and yield new insights into the working methods of Renaissance masters. Sophisticated computer graphics recreations of tableaus allow us to explore "what if" scenarios, and reveal the lighting and working methods of masters such as van Eyck, Parmigianino and Caravaggio.

How do these computer methods work? What can computers reveal about images that even the best-trained connoisseurs, art historians and artist cannot? How much more powerful and revealing will these methods become? In short, how is the "hard humanities" field of computer image analysis of art changing our understanding of paintings and drawings?

This profusely illustrate lecture for scholars interested in computer vision, pattern recognition and image analysis will include works by Jackson Pollock, Vincent van Gogh, Jan van Eyck, Hans Memling, Lorenzo Lotto, and several others. You will never see paintings the same way again.

Joint work with Antonio Criminisi, Andrey DelPozo, David Donoho, Marco Duarte, Micah Kimo Johnson, Dave Kale, Ashutosh Kulkarni, M. Dirk Robinson, Silvio Savarese, Morteza Shahram, Ron Spronk, Christopher W. Tyler, Yasuo Furuichi and Gabor Nagy

About the speaker Dr. David G. Stork is Chief Scientist of Ricoh Innovations. He is a graduate in physics of the Massachusetts Institute of Technology and the University of Maryland at College Park andstudied art history at Wellesley College and was Artist-in-Residence through the New York State Council of the Arts. He is a Fellow of the International Association for Pattern Recognition "...for the application of computer vision to the study of art," and has published seven books/proceedings volumes, including Seeing the Light: Optics in nature, photography, color, vision and holography (Wiley), Computer image analysis in the study of art (SPIE), Computer vision and image analysis of art (SPIE), HAL's Legacy: 2001's computer as dream and reality (MIT), and Pattern Classification , 2nd ed. (Wiley).

Mark Steyvers

The Wisdom of Crowds in the Aggregation of Rankings

When individuals recollect events or retrieve facts from memory, how can we aggregate the retrieved knowledge to best reconstruct the actual set of events or facts? We analyze the performance of individuals in a number of ranking tasks, such as reconstructing the order of historic events from memory (e.g. the order of US presidents) as well as predicting the rankings of sports teams. We also compare situations in which each individual in a group independently provides a ranking with an iterated learning environment in which individuals pass their rankings to the next person in a chain.

We aggregate the reported rankings across individuals using a number of graphical models, including variants of Mallows model, Thurstonian models, and the Perturbation model. The models assume that each individual's reported ranking is based on a random permutation of a latent ground truth and that each individual is associated with a latent level of knowledge of the domain. The models demonstrate a wisdom of crowds effect, where the aggregated orderings are closer to the true ordering than the orderings of the best individual. The models also demonstrate that we can recover the degree of expertise of each individual, in the absence of any explicit feedback or access to ground truth.

Cosma Shalizi

Markovian (and conceivably causal) representations of stochastic processes

Basically any stochastic process can be represented as a random function of a homogeneous, measured-valued Markov process. The Markovian states are minimal sufficient statistics for predicting the future of the original process. This has been independently discovered multiple times since the 1970s, by researchers in probability, dynamical systems, reinforcement learning and time series analysis, under names like "the measure-theoretic prediction process", "epsilon machines", "causal states", "observable operator models", and "predictive state representations". I will review mathematical construction and information-theoretic properties of these representations, touch briefly on their reconstruction from data, and finally consider under what conditions they may allow us to form causal models of dynamical processes.

Christopher Langmead

Probabilistic Graphical Models for Structural Biology

Some of the most important and challenging problems in biology concern the structure and dynamics of biological macromolecules (e.g., proteins, DNA). The field of Structural Biology lies at the intersections of molecular biology, biophysics, and biochemistry and is devoted to revealing the mechanisms governing biological processes (e.g., regulation, recognition, signaling, transport, self-assembly and catalysis). One of the most important mechanisms is the ability for molecules to sample different geometric conformations. Indeed the structures of biological macromolecules are more accurately described as multivariate distributions over atomic configurations, rather than as a fixed set of points. Probabilistic graphical models (PGM) are ideally suited to encoding these distributions and to solving inference problems of critical importance to biologists, pharmaceutical companies, and protein engineers. In this talk, I will first review the objectives and challenges encountered in Structural Biology, and then survey some recent results illustrating the power and elegance of PGM-based solutions. I will conclude with a discussion of some of the open problems in the field and the modeling and algorithmic challenges they present. The talk is intended for a general audience and no prior background in Structural Biology is needed.