When is a Big Data problem really "big"? Certainly questions of size are relative. The social network data you have to work on here is in absolute terms not very big: 9 million persons; while Facebook has more than 100 times as many users, and the data you work on has all posts, likes and pictures stripped away, while this is where most of the data volume in social networks is. What is left over here, the "social graph" of who knows who, is just 5GB of compressed data. One purpose of this practical is to show you that many Big Data problems are not really big problems and can be solved with simple hardware, if one would only stop and think for a minute about a reasonably efficient solution.

This practical aims to let students think about the performance of computational tasks on large datasets. The social network query you must implement, requires to think about the data, its data distributions, as well as algorithms to navigate this data. An understanding of the interaction between data an algorithms is not only useful in order to solve problems that seem big with simple hardware, but is also crucial to analyze and optimize the really big Big Data problems that we later will address with cluster solutions. Without such insight, one can have a ten thousand node cluster and still run aground.

One issue that makes data management problems potentially "big" problems is the complexity of the task. High complexity can come in the form of algorithms that are CPU intensive (either many cycles per data element, or quadratic in terms of data size or worse), and/or in the shape of "difficult" access patterns. The access pattern to a dataset can be sequential or scattered (random) in which case both temporal and physical locality will determine how expensive this access is going to be. You will find that there is a large difference, certainly when managing datasets larger than RAM, between sequential access, and random access without locality.

It is also important to think about what part of the data is actually needed, and which is not (could potentially be thrown away). Because you have the opportunity to build a data structure of your choice in the data loading phase, thinking about this is certainly worthwhile.

There is no obligatory language in which you have to program, however we recommend using C or C++. Java might also work, but it will eat much more memory - of which you have little - if you do not take care (i.e. put everything as basic types in arrays and avoid using objects and standard hashmaps). Scripting languages like python are much slower and will not make you win this competition and might not even allow you to make the maximal benchmarking timeout. So, certain programming skills come in handy. We do provide an example C implementation, and the changes needed to turn that into a viable solution are limited.

Further, we require you to use git for version control over your software solutions and suggest to use github as a respository. Using git is part of the basic skill-set of any computer scientist, so be grateful ;-)

In the lecture I will talk about a number of advanced data management techniques, such as columnar storage, vectorized execution (that easily benefits from SIMD instructions) and discuss inverted lists and e.g. bloom filters. This practicum provides opportunity to employ these in order to enhance the speed of your solution (and win the competition). Such advanced techniques are not required, though, to create an acceptable solution.

To make this assignment even more fun, there is a competition between the practicum groups to see who can make the fastest solution. The prize is getting to choose your topic for Assignment 2. The leaderboard is automatically updated by our evaluation infrastructure, which regularly polls your github code repositories for new commits.

In the practical we force you to use a relatively small machine, actually a virtual machine, to manage this social network dataset. This virtual machine runs Linux, and you can fire it up on your own laptop in VirtualBox regardless whether you use MacOS, Windows or Linux.

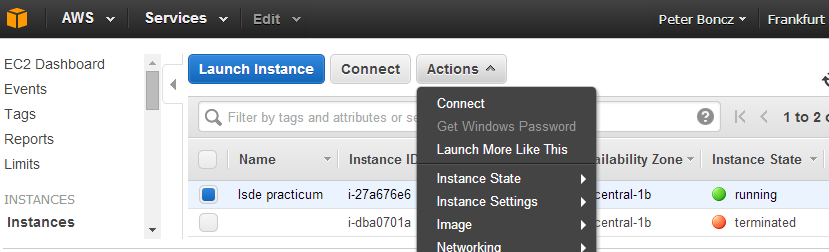

Alternatively, you can run in the cloud. For many of you this might be the first encounter with utility computing. We prepared a VM image to use in the Amazon EC2 free tier.

[an error occurred while processing this directive]